Teaching established software new tricks

15 October 2021 | By

For almost 20 years, the ATLAS Collaboration has used the Athena software framework to turn raw data into something physicists can use in an analysis. Built on top of the Gaudi framework, Athena was written to be flexible and robust, using configurable chains of “algorithms” to process both simulated and real data. This modularity has enabled Athena to be used for many different tasks, from simulating the detector's response and understanding its behaviour via calibration studies to physics analysis.
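To make the idea of a “configurable chain of algorithms” concrete, here is a minimal Python sketch of serial processing. It is not Athena or Gaudi code. The `Algorithm` class, `run_serial`, the toy `digitise`/`cluster` steps and the dict-based event model are all hypothetical, chosen only to show that each event passes through a fixed, ordered list of steps.

```python
from typing import Callable, Dict, List

# Hypothetical event model: a dict of named data products.
Event = Dict[str, object]


class Algorithm:
    """Hypothetical stand-in for a framework algorithm: one configurable processing step."""

    def __init__(self, name: str, step: Callable[[Event], None]) -> None:
        self.name = name
        self.step = step

    def execute(self, event: Event) -> None:
        # Each algorithm reads what earlier steps wrote and adds its own output.
        self.step(event)


def run_serial(chain: List[Algorithm], events: List[Event]) -> List[Event]:
    """Process events one at a time, running every algorithm in the configured order."""
    for event in events:
        for alg in chain:
            alg.execute(event)
    return events


def digitise(event: Event) -> None:
    # Toy "digitisation": scale every raw hit.
    event["digits"] = [2 * h for h in event["hits"]]


def cluster(event: Event) -> None:
    # Toy "clustering": needs the digits, so it must come after digitise in the chain.
    event["clusters"] = sum(event["digits"])


# The configuration is just the ordered list; swapping entries changes the job.
chain = [Algorithm("Digitise", digitise), Algorithm("Cluster", cluster)]
```

Calling `run_serial(chain, [{"hits": [1, 2, 3]}, {"hits": [4]}])` leaves `clusters` equal to 12 and 8 in the two events. Putting `cluster` first would fail with a `KeyError`, because in a strictly serial chain the configured order alone guarantees that inputs exist.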

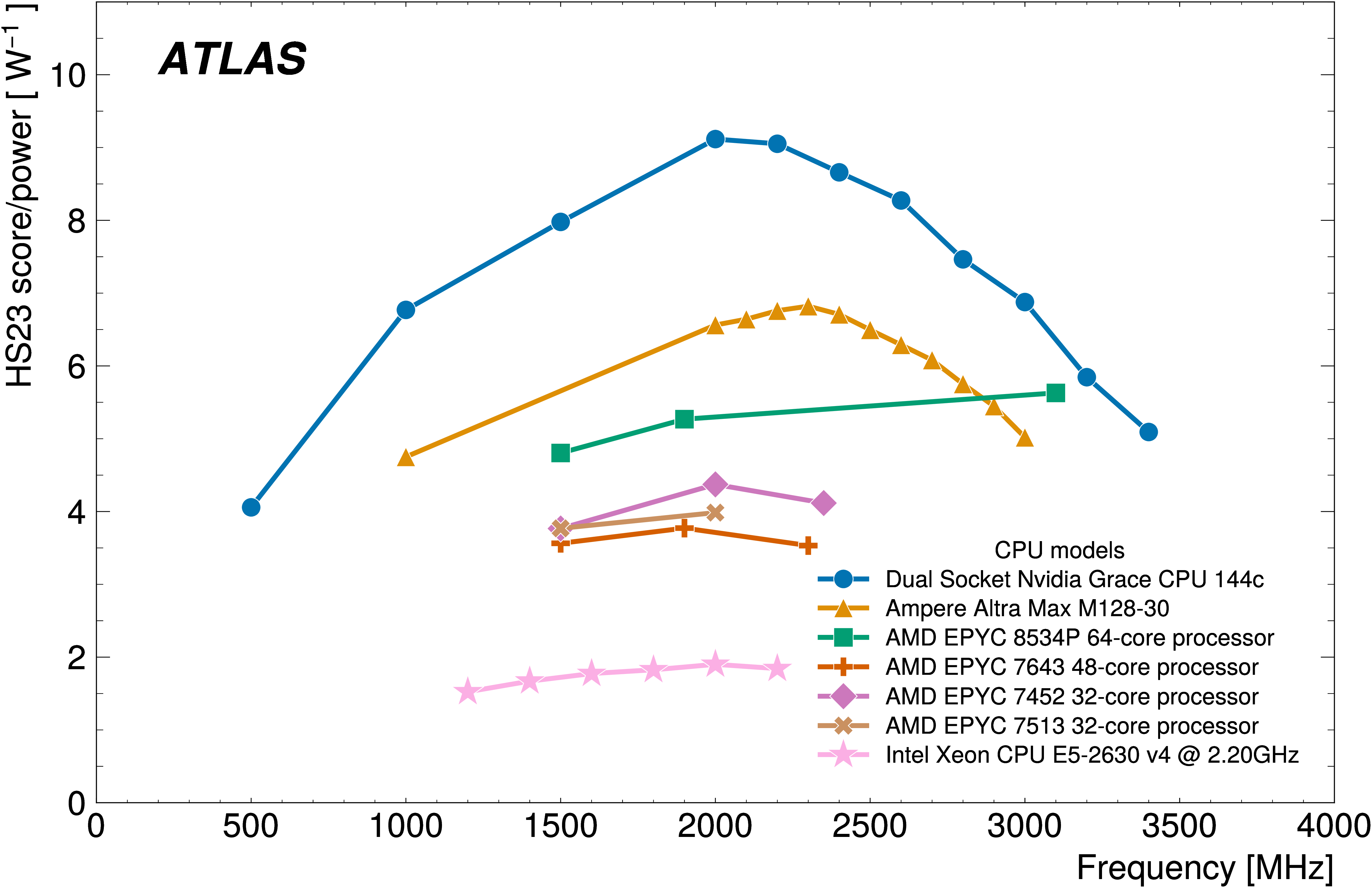

However, the computing world has moved on since Athena was first created. In particular, as Figure 1 shows, there has been a paradigm shift away from boosting computing performance (in blue) by raising the CPU frequency (in green), towards keeping the frequency constant and instead increasing the number of processors, or cores (in black). This industry-wide shift has affected the processors in our phones, laptops and desktops, as well as the CPUs in the global computing resources that ATLAS relies on, found in data centres scattered all over the world.

But fully exploiting the potential of modern CPUs requires software that supports parallel processing of data, or “multithreading”, something for which Athena was never originally designed. Furthermore, as the LHC beam energy and luminosity have continued to increase, so too has the memory required to process each collision event. As a result, ATLAS was not getting the best possible CPU performance out of its resources, sometimes even keeping cores idle so as not to run out of memory. For Run 2 of the LHC (2015-2018), ATLAS software developers implemented a more memory-efficient multiprocess version of Athena (AthenaMP). Yet looking ahead to the demands of the High-Luminosity LHC (starting in 2028), it was clear that something more drastic needed to be done.

Following several years of development, the ATLAS Collaboration has launched a new “multithreaded” release of its analysis software, Athena.

In 2014, the ATLAS Collaboration launched a project to rewrite Athena to be natively multithreaded (AthenaMT). Since writing multithreaded software can be very difficult, the collaboration decided from the start that the framework should shield ordinary developers from the complexities of the rewrite as much as possible. This involved a dedicated team of core developers from ATLAS (and other Gaudi-using experiments) devising new ways to process data. In traditional serial data processing, algorithms are run in a strictly predefined order, processing events one-by-one as they are read from disk. AthenaMT needed to be more flexible: able to process multiple events in parallel while also working on different parts of a single collision event (for example, tracking and calorimetry) at the same time.
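The two levels of parallelism described above, several events “in flight” at once and independent parts of one event running concurrently, can be sketched with Python's standard `concurrent.futures` module. This is an illustration under simplifying assumptions, not how AthenaMT is implemented: `tracking`, `calorimetry`, `process_event`, `process_run` and the pool sizes are hypothetical, and the “work” is replaced by string labels.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List


def tracking(event_id: int) -> str:
    """Hypothetical tracking step; real work is replaced by a label."""
    return f"tracks-{event_id}"


def calorimetry(event_id: int) -> str:
    """Hypothetical calorimeter step; independent of tracking."""
    return f"calo-{event_id}"


def process_event(alg_pool: ThreadPoolExecutor, event_id: int) -> Dict[str, str]:
    # Intra-event concurrency: the two steps share no inputs or outputs,
    # so both are submitted at once and may overlap in time.
    trk = alg_pool.submit(tracking, event_id)
    calo = alg_pool.submit(calorimetry, event_id)
    return {"event": str(event_id), "tracks": trk.result(), "calo": calo.result()}


def process_run(event_ids: List[int], events_in_flight: int = 3,
                alg_threads: int = 4) -> List[Dict[str, str]]:
    # Inter-event concurrency: up to `events_in_flight` events are open at once.
    # Algorithm tasks go to a separate pool, so an event thread waiting on its
    # own tasks can never starve those tasks of a worker (no deadlock).
    with ThreadPoolExecutor(max_workers=alg_threads) as alg_pool, \
            ThreadPoolExecutor(max_workers=events_in_flight) as event_pool:
        # map() returns results in input order even if events finish out of order.
        return list(event_pool.map(lambda e: process_event(alg_pool, e), event_ids))
```

For example, `process_run([1, 2, 3, 4])` returns one record per event, in the original order. Note that in CPython the global interpreter lock limits true CPU parallelism for pure-Python work, so this sketch shows the structure of the problem rather than the speed-up a C++ framework can achieve.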

To accomplish this, the team created a “scheduler”, which looks at the data inputs each algorithm requires and runs the algorithms in the order those dependencies demand. For example, if a particular reconstruction algorithm needs certain input, it will not be scheduled to run until the algorithms producing that input have finished. The data input can either be “event data” from the detector readout or “conditions data” about the detector itself (e.g. gas temperature, alignment, etc.). Figure 2 shows this in practice: each event is a different colour and each shape represents a different algorithm. The scheduler uses available resources to run algorithms as soon as their inputs become available.
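The scheduling rule above (an algorithm runs once everything it reads has been produced) can be sketched as a small data-flow loop over a thread pool. This is a hypothetical illustration, not the Gaudi/Athena scheduler: the `Alg` record, the `schedule` function, the key names and the toy algorithms are all assumptions. Event data and conditions data are treated alike, simply as named entries in a shared store.

```python
from concurrent.futures import FIRST_COMPLETED, Future, ThreadPoolExecutor, wait
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Alg:
    """Hypothetical algorithm description: the data keys it reads and writes."""
    name: str
    inputs: List[str]
    outputs: List[str]
    func: Callable[..., tuple]  # called with inputs in order, returns outputs as a tuple


def schedule(algs: List[Alg], store: Dict[str, object], workers: int = 4) -> List[str]:
    """Run each algorithm once all its inputs are in `store`; return the completion order."""
    pending = list(algs)
    running: Dict[Future, Alg] = {}
    order: List[str] = []
    with ThreadPoolExecutor(max_workers=workers) as pool:
        while pending or running:
            # Submit every pending algorithm whose inputs are all available.
            for alg in [a for a in pending if all(k in store for k in a.inputs)]:
                pending.remove(alg)
                args = [store[k] for k in alg.inputs]
                running[pool.submit(alg.func, *args)] = alg
            if not running:
                # Nothing is running and nothing is ready: some input is never produced.
                raise RuntimeError(f"missing inputs for {[a.name for a in pending]}")
            # Wait for at least one algorithm to finish, then publish its outputs.
            done, _ = wait(running, return_when=FIRST_COMPLETED)
            for fut in done:
                alg = running.pop(fut)
                store.update(zip(alg.outputs, fut.result()))
                order.append(alg.name)
    return order


# Event data ("raw_hits") and conditions data ("alignment") are both just inputs.
store = {"raw_hits": [1, 2, 3], "alignment": 0.5}
algs = [
    # Listed first, but it must wait until Clustering has produced "clusters".
    Alg("Tracking", ["clusters", "alignment"], ["tracks"],
        lambda cl, al: ([c + al for c in cl],)),
    Alg("Clustering", ["raw_hits"], ["clusters"], lambda hits: ([10 * h for h in hits],)),
    Alg("Calo", ["raw_hits"], ["calo_energy"], lambda hits: (sum(hits),)),
]
order = schedule(algs, store)
```

Here `Clustering` and `Calo` start together because both only need `raw_hits`, while `Tracking` is held back until `clusters` exists, regardless of where it appears in the list. Only the main thread writes to `store`, which keeps the shared state free of data races in this sketch.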

In addition to adding new features, such as the scheduler, all of the existing code had to be updated (and simultaneously reviewed) in order to run in multiple threads. Since Athena has more than four million lines of C++ code and over one million lines of Python code, this was an enormous task that involved hundreds of ATLAS physicists and developers over several years. The effort was guided by a core ATLAS software team, who also provided documentation and organised tutorials.

The results have been worth the effort. Figure 3 compares the AthenaMP software used during Run 2 (“release 21”) to the new multithreaded AthenaMT (“release 22”) that will be used in Run 3 (starting next year). From this you can see that the migration to a multithreaded approach (and other changes) has sharply reduced the memory consumption per thread/process with respect to the Run-2 software: an eight-process job using Run-2 software uses about 20 GB of memory, while an eight-thread job using the Run-3 setup uses about 9 GB. This enormous memory reduction comes with no loss of event throughput: AthenaMT and AthenaMP take almost exactly the same time per event.
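Using only the approximate figures quoted above, the per-worker averages work out as follows. These are whole-job averages derived from rounded totals, not measured per-thread or per-process footprints.

$$
\frac{20\ \mathrm{GB}}{8\ \text{processes}} = 2.5\ \mathrm{GB\ per\ process},\qquad
\frac{9\ \mathrm{GB}}{8\ \text{threads}} \approx 1.1\ \mathrm{GB\ per\ thread},\qquad
1-\frac{9\ \mathrm{GB}}{20\ \mathrm{GB}} = 0.55
$$

In other words, for the same eight-way parallelism the Run-3 job needs roughly 55% less memory, a little under half of what the Run-2 job required.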

AthenaMT is now ready for Run 3, and is currently being used to re-analyse all of ATLAS’ Run-2 data. While this first stage is complete, the overall mission to modernise Athena is ongoing. How will Athena run on new hardware architectures? And what new reconstruction and simulation techniques might be used? The ATLAS team has already begun investigating exciting options in computing hardware and software development for the future.

Links

- Bringing new life to ATLAS data, ATLAS News

- See also the full lists of ATLAS Conference Notes and ATLAS Physics Papers.